1. What is an Offline/Online workflow?

Various flavors of tape used for offline (and sometimes for online!)

Like most things in the media industry, the term originates from the analog days of yore. We shot stuff on film. Cutting and splicing film, unless done very carefully, can easily damage it. So we used workprints – film copies of the original negative – that could be handled and cut without ruining the original stock. This was the offline edit. Once the creative edit was finished on the workprint, the points at which the film was cut (the frame numbers) were applied to the master footage: your online. Nowadays this step is called the conform. Over the years, the workprint became videotape – 2”, ¾”, VHS, and in some cases, Laserdisc… but all of these formats were still offlines for the film online.

Toward the late ’80s, a movement began to replace the creative analog editing that had been done for decades… with computers. The NLE – non-linear editor – was born.

And today, as film fades away, we apply the same paradigm to digital footage inside our computers. We perform creative edits with low-resolution versions of the high-res master footage. Once our creative edit is done, we tell our NLE to use the high-res footage instead of the low-res.

2. What are some examples?

If we focus on how offline/online is used today, it invariably involves a discussion on codecs, and you know there are few things in this world I like more than a good codec discussion.

This means taking the OCM (original camera masters) and creating low-res versions, often called proxies, for the creative team to edit with. The low-res versions typically don’t look nearly as good as the originals, but they take up less storage space and are easy for the computer to handle.

The quality of the proxies usually lies below what we call “broadcast quality”.

Broadcast quality is just what it sounds like: the quality at which something will or will not be broadcast, per the standards of the broadcaster. And this is somewhat subjective. Over the years, “broadcast quality” has become a moving target, and the transitions from SD to HD and from HD to UHD have thrown a wrench in the works… and that doesn’t even account for theatrical quality. Suffice it to say, “broadcast quality” is essentially “whatever the distribution partner asks for”.

Generally speaking, any format you work with that falls below broadcast quality is considered “offline”, and any format at or above broadcast quality (or the original camera masters themselves) is considered your online version… the vast majority of the time. High-end feature films with larger budgets can walk this line, but it’s a rarity.

Here are some places to start.

| Common Offline | Common Online |
|---|---|
| 15:1, 14:1, 10:1 (Avid SD) | 2:1, 1:1 |
| DNxHD 36 – 115* | DNxHD 145 and above |
| ProRes 422 Proxy, LT | ProRes 422 and above |
| DNxHR LB | DNxHR SQ – DNxHR 444 |
| H.264 below ~2.5 Mbps | CineForm: High or better |
| | OCM (Original Camera Masters) |

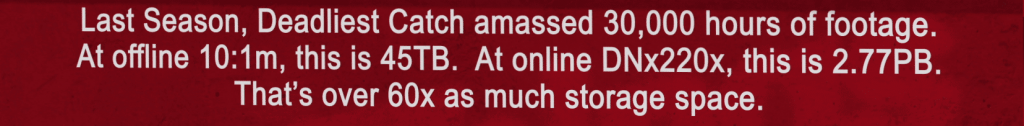

It’s important to remember that offline proxy resolutions don’t need to match the resolution of the originals. We can do a standard-def offline for an HD or 4K master. Unscripted reality television shows often cut in standard def, using formats such as the 15:1, 14:1, and 10:1 listed above. They are small in file size, which really adds up to storage savings across thousands of hours of footage… and they are easy for computers to decode, especially when dealing with multicam.
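To put rough numbers on those storage savings, here is a small Python sketch. It assumes constant-bitrate video and ignores audio and container overhead, and it leans on the fact that the DNxHD flavor names (36, 115, 145) roughly track their video bitrate in Mbps at 1080p. The helper names are hypothetical, not part of any NLE.

```python
# Rough storage math for a proxy library (assumption: constant bitrate,
# audio and container overhead ignored, decimal GB/TB).

def gigabytes_per_hour(bitrate_mbps: float) -> float:
    """Convert a video bitrate in megabits/second to decimal gigabytes per hour."""
    bits_per_hour = bitrate_mbps * 1_000_000 * 3600
    return bits_per_hour / 8 / 1_000_000_000  # bits -> bytes -> GB


def library_terabytes(bitrate_mbps: float, hours: float) -> float:
    """Total storage, in decimal terabytes, for `hours` of footage at one bitrate."""
    return gigabytes_per_hour(bitrate_mbps) * hours / 1000


if __name__ == "__main__":
    # DNxHD names used as approximate Mbps values at 1080p.
    for name, mbps in [("DNxHD 36", 36), ("DNxHD 115", 115), ("DNxHD 145", 145)]:
        print(f"{name}: {gigabytes_per_hour(mbps):.2f} GB/hour, "
              f"{library_terabytes(mbps, 1000):.2f} TB per 1,000 hours")
```

Under those assumptions, DNxHD 36 works out to about 16 GB per hour versus about 65 GB for DNxHD 145, so a 1,000-hour unscripted show is roughly the difference between 16 TB and 65 TB of media, before any mirroring or backups.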

Scripted shows with less footage, or feature films, may move to DNxHD for their offline. Bad Robot, for example, often works in DNxHD 115 for movies like Star Trek, while Deadpool and Gone Girl used ProRes. Less footage and fewer multicam angles mean less media to keep available… which means less storage.

Folks who edit on mobile devices, or remotely, may choose even smaller files that can be transmitted easily over the internet, like lightweight H.264 files. Web-based editing solutions such as Media Composer Cloud, MediaCentral | UX, and Forscene rely on this.

3. What are the benefits?

The benefits of an offline/online workflow can be summed up in one word: cost.

Working with an uber high res master or the originals themselves requires a beefy computer to chomp through the wide array of formats we have out there today. Offline media is less CPU intensive, and thus inexpensive computers – or older ones – can be used.

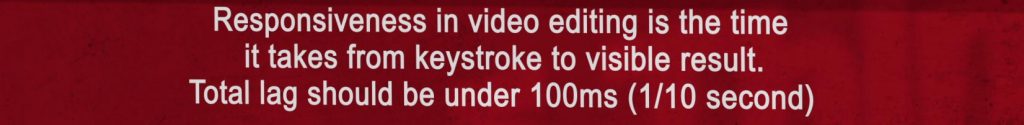

This directly relates to the concept of a “pleasurable editing experience”. If the editing application can easily process the footage, the app is more responsive. When you are gliding over your hotkeys, the app and media keep up. In terms of sanity and pure job enjoyment, a responsive system is worth its weight in gold.

Back to tech, we also don’t need a large amount of fast storage to house all of the high res content. If we utilize an offline workflow, we can spend less money on this nearline storage – and we can connect more machines to the same shared storage. Smaller files = less storage and smaller pipes.

Speaking of storage, offline workflows allow your systems to connect to the network and shared storage inexpensively. Instead of connecting via costly fibre or 10GigE connections and the expensive switches they need, you can use more common connection methods, like single GigE and less expensive switches.
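The same bitrate arithmetic explains the cheaper network. This sketch estimates how many simultaneous streams of a given bitrate fit on one link. The `usable_fraction` of 0.7 is an assumed headroom factor, not a measured figure; real shared-storage throughput depends on the protocol, the storage itself, and other traffic on the network.

```python
import math


def max_streams(link_gbps: float, stream_mbps: float, usable_fraction: float = 0.7) -> int:
    """Estimate how many constant-bitrate video streams fit on one network link.

    usable_fraction is an assumed headroom factor (0.7 = 70% of line rate),
    standing in for protocol overhead and other traffic on the link.
    """
    usable_mbps = link_gbps * 1000 * usable_fraction
    return math.floor(usable_mbps / stream_mbps)


if __name__ == "__main__":
    for link in (1, 10):  # single GigE vs 10GigE
        for name, mbps in [("DNxHD 36", 36), ("DNxHD 145", 145)]:
            print(f"{link} GbE, {name}: ~{max_streams(link, mbps)} streams")
```

With those assumptions, a single GigE link carries about 19 DNxHD 36 streams but only 4 DNxHD 145 streams. In a multicam edit, each angle counts as its own stream.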

If we’re looking at standalone systems, you can now use smaller drives and don’t have to focus so much on a blazing-fast RAID. You can also connect via slower or older connection methods, instead of trying to squeeze every last MB/s out of that Thunderbolt or USB 3 connection.

Offline also allows for portability. You can now cut on a laptop with a single attached drive – or even using the internal drive (in fact, that’s how I cut this series). You can temp in effects without having massive render times.

It’s also much faster to export preview copies. While the quality might not be great, exports finish sooner, so it’s quicker to get review-and-approve copies out to the creatives you’re collaborating with.

4. What are the downsides?

You can’t have sweet without the sour, and offline/online workflows are no exception. Expect a few hiccups if you’re looking into this for your next project.

Time. Creating low res versions of your master files requires transcoding. This can either be accomplished with your editing system, like Avid Media Composer, Adobe Premiere Pro, or Final Cut Pro X, or a 3rd party transcoding solution.

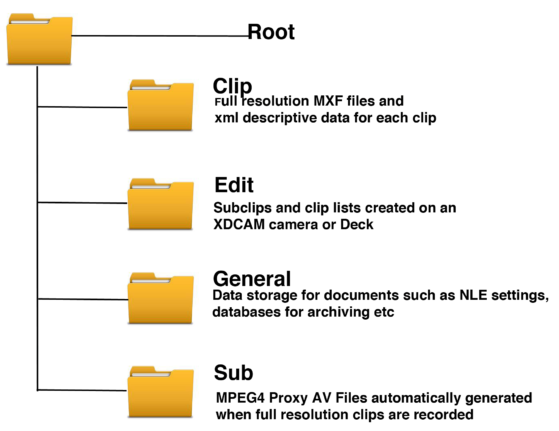

This causes two problems: 1) it ties up your editing machine with transcoding, so there isn’t a heck of a lot you can do while it’s chewing through the content, and 2) if you don’t use your NLE, then you’re using a 3rd party tool that needs to understand your camera originals. Many transcoders don’t understand card hierarchies or all camera codecs. If you’re tight on time, this can be a major drawback.
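As one example of the third-party route, here is a sketch that builds (but does not run) an FFmpeg command for a ProRes Proxy file. FFmpeg is swapped in purely for illustration; it isn’t mentioned above, and it won’t read every camera format or card structure (raw formats in particular may need the camera vendor’s tools). The sketch assumes a QuickTime source, 540-line proxies, and FFmpeg’s `prores_ks` encoder; `proxy_command` and the file names are hypothetical.

```python
import shlex
from typing import List, Optional

# FFmpeg's prores_ks encoder numbers its profiles: 0 = Proxy, 1 = LT, 2 = 422, 3 = HQ.
PRORES_PROFILES = {"proxy": 0, "lt": 1, "standard": 2, "hq": 3}


def proxy_command(src: str, dst: str, profile: str = "proxy",
                  height: int = 540, timecode: Optional[str] = None) -> List[str]:
    """Build, but do not run, an FFmpeg command that creates a ProRes proxy.

    No -r option is passed, so the source frame rate is not forced to a new value.
    """
    cmd = [
        "ffmpeg", "-i", src,
        "-map_metadata", "0",              # copy the source's global metadata tags
        "-vf", f"scale=-2:{height}",       # scale to `height` lines, keep aspect, even width
        "-c:v", "prores_ks",
        "-profile:v", str(PRORES_PROFILES[profile]),
        "-c:a", "pcm_s16le",               # uncompressed PCM audio in the .mov
    ]
    if timecode is not None:
        cmd += ["-timecode", timecode]     # stamp the original start timecode explicitly
    cmd.append(dst)
    return cmd


if __name__ == "__main__":
    cmd = proxy_command("A001C003.mov", "proxies/A001C003.mov", timecode="14:22:10:05")
    print(shlex.join(cmd))
    # To actually transcode: subprocess.run(cmd, check=True)
```

Passing the start timecode explicitly, rather than trusting it to be carried over, is a deliberate choice in this sketch: it is cheap insurance for the relink later.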

Speaking of transcoding, you need to be consistent with your frame rates and metadata when creating your proxies. Many NLEs conform from the offline to the online based on basic metadata and timecode. If you don’t retain the metadata, or you change the frame rate, the online conform tool may not be able to find and relink to the original media.
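A simple pre-flight check makes this concrete: compare each proxy’s basic metadata against its original before anyone starts cutting. The field names in `ClipInfo` are hypothetical stand-ins for whatever your transcoder or NLE actually reports; the point is which properties a timecode-based relink typically depends on.

```python
from dataclasses import dataclass
from fractions import Fraction
from typing import List


@dataclass
class ClipInfo:
    reel: str        # reel / tape / clip name (hypothetical field names throughout)
    start_tc: str    # start timecode, "HH:MM:SS:FF"
    rate: Fraction   # frame rate, e.g. Fraction(24000, 1001) for 23.976
    frames: int      # duration in frames


def proxy_problems(original: ClipInfo, proxy: ClipInfo) -> List[str]:
    """List the differences that would likely break a timecode-based relink."""
    problems = []
    if proxy.rate != original.rate:
        problems.append(f"frame rate changed: {original.rate} -> {proxy.rate}")
    if proxy.start_tc != original.start_tc:
        problems.append(f"start timecode changed: {original.start_tc} -> {proxy.start_tc}")
    if proxy.reel != original.reel:
        problems.append(f"reel name changed: {original.reel!r} -> {proxy.reel!r}")
    if proxy.frames != original.frames:
        problems.append(f"duration changed: {original.frames} -> {proxy.frames} frames")
    return problems


# Hypothetical example: a good proxy, and one transcoded at 24.000 fps that lost its timecode.
ocm = ClipInfo("A001C003", "14:22:10:05", Fraction(24000, 1001), 1440)
good_proxy = ClipInfo("A001C003", "14:22:10:05", Fraction(24000, 1001), 1440)
bad_proxy = ClipInfo("A001C003", "00:00:00:00", Fraction(24), 1440)

if __name__ == "__main__":
    print(proxy_problems(ocm, good_proxy))  # []
    for issue in proxy_problems(ocm, bad_proxy):
        print(issue)
```

Note that 23.976 and 24.000 count with the same 24-frame timecode, which is why the sketch compares the exact rate rather than just the timecode.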

If you decide to use multiple applications on your project, maybe Avid for creative editorial, and Resolve for your conform and color, expect to deal with conforming issues. What I mean by that is it’s very rare for NLE project files to be 100% interchangeable with every other NLE or grading application. This means relinking from the low res to the high res may be problematic. This is another reason that you should always test-drive your workflow before starting a project to ensure it’s gonna work.
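Under the hood, a timecode-based relink boils down to this: for each edit event, find a master clip with the same reel name whose timecode range contains the event’s source in and out points. This sketch assumes integer-timebase, non-drop-frame timecode and treats the out point as exclusive, as CMX 3600-style EDLs do; the class names, reel names, and paths are all hypothetical. Real conform tools also handle drop-frame, speed changes, and much more, which is where cross-application round trips can break.

```python
from dataclasses import dataclass
from typing import List, Optional


def tc_to_frames(tc: str, timebase: int) -> int:
    """Convert non-drop-frame "HH:MM:SS:FF" timecode to an absolute frame count."""
    hh, mm, ss, ff = (int(part) for part in tc.split(":"))
    return ((hh * 60 + mm) * 60 + ss) * timebase + ff


@dataclass
class MasterClip:
    reel: str        # reel/clip name carried through from the camera original
    start_tc: str    # timecode of the first frame
    frames: int      # duration in frames
    path: str        # hypothetical location of the high-res media


@dataclass
class EditEvent:
    reel: str
    src_in: str      # first source frame used (inclusive)
    src_out: str     # frame after the last one used (exclusive)


def relink(event: EditEvent, masters: List[MasterClip], timebase: int) -> Optional[str]:
    """Return the path of the master clip that covers `event`, or None."""
    ev_in = tc_to_frames(event.src_in, timebase)
    ev_out = tc_to_frames(event.src_out, timebase)
    for clip in masters:
        if clip.reel != event.reel:
            continue  # reel name mismatch: a common cause of failed relinks
        clip_in = tc_to_frames(clip.start_tc, timebase)
        clip_out = clip_in + clip.frames  # exclusive end
        if clip_in <= ev_in and ev_out <= clip_out:
            return clip.path
    return None


# Hypothetical example: one master clip, one event inside it, one outside it.
masters = [MasterClip("A001C003", "14:22:10:05", 1440, "/ocm/A001C003.mov")]
inside = EditEvent("A001C003", "14:22:20:00", "14:22:25:00")
outside = EditEvent("A001C003", "14:23:30:00", "14:23:32:00")

if __name__ == "__main__":
    print(relink(inside, masters, 24))   # the master clip's path
    print(relink(outside, masters, 24))  # None: the range falls past the clip's end
```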

Now, a low res version, if the visual quality is poor enough, may not be acceptable to edit against. Checking lip-sync, checking focus, or even checking any resizing you may have on the image can be difficult. Also, preview copies for review and approve will be visually poor, which some demanding clients aren’t thrilled with.

Lastly, with independent projects, funding can often run out toward the end of the post process. If you run out of money and can’t afford to do your online, you’re now stuck with your masterpiece… in low res. This is why many productions pay up front to edit with a broadcast-quality version, so they can always do a decent-quality export.

5. Is there a hybrid?

Indeed there is! We call this a mezzanine file.

While offline requires a low-res version to edit with and a high-res version to link back to, and going pure online means editing the camera originals, many productions go with a mezzanine file instead. A mezzanine file is a high-quality master from which everything else is derived. In post, this would be a high-res master that is not the camera original.

For example, let’s say you shoot on a RED or an Arri camera. They produce some very high-bandwidth files. What if you were to create broadcast-quality versions of this footage, edit with those, and then output from them? You create high-quality masters that retain enough quality through the editorial and color process, yet are light enough that most systems can edit them.

This saves time, since you don’t have to deal with an offline/online workflow, and it ensures you can output at any time. You do need some time on the front end to create the mezzanine files before the edit, but keep in mind that this happens at the beginning of the post process, where stress is traditionally lower than at the end of a project, when you’re racing to conform and deal with the issues inherent to conforming.

……..

Have more offline and online concerns beyond these 5 questions? Ask me in the Comments section or track me down on social media. Also, please subscribe and share this tech goodness with the rest of your techie friends.

Until the next episode: learn more, do more – thanks for watching.
