Generative A.I. is the big sexy right now, specifically creating audio, stills, and video with A.I. What’s often overlooked, however, is how useful Analytical A.I. is. In the context of video analysis, that includes facial or location recognition, logo detection, sentiment analysis, and speech-to-text, just to name a few. Those analytical tools are what we’ll focus on today.

We’ll start with small tools and then go big with team and facility tools. But I’ll sneak in some Generative A.I. here and there for you to play with.

1. StoryToolkitAI

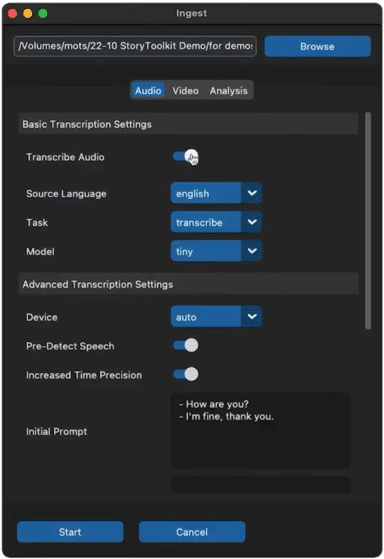

StoryToolkitAI can transcribe audio and index video

Welcome to the forefront of post-production evolution with StoryToolkitAI, the brainchild of Octavian Mots. And with an epic name like Octavian, you’d expect something grand.

He understood the assignment.

StoryToolkitAI transforms how you interact with your own local media. Sure, it handles the tasks we’ve come to expect from A.I. media tools, like speech-to-text transcription.
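Transcription becomes far more useful once it’s indexed, because then you can jump straight to where something is said. This post doesn’t cover how StoryToolkitAI stores its index, so here is a minimal, hypothetical sketch of the general idea: timestamped transcript segments (the `Segment` type and the sample lines are made up for illustration) go into an inverted index, and a word lookup returns the timecodes where that word is spoken. It assumes 24 fps non-drop-frame timecode.

```python
import re
from collections import defaultdict
from dataclasses import dataclass

@dataclass
class Segment:
    start: float  # seconds from the start of the clip
    text: str

def to_timecode(seconds: float, fps: int = 24) -> str:
    """Convert seconds to HH:MM:SS:FF (non-drop-frame)."""
    total_frames = round(seconds * fps)
    total_seconds, frames = divmod(total_frames, fps)
    hours, rem = divmod(total_seconds, 3600)
    minutes, secs = divmod(rem, 60)
    return f"{hours:02d}:{minutes:02d}:{secs:02d}:{frames:02d}"

def build_index(segments: list[Segment]) -> dict[str, list[float]]:
    """Map each lowercase word to the start times of the segments that contain it."""
    index: dict[str, list[float]] = defaultdict(list)
    for seg in segments:
        for word in set(re.findall(r"[a-z']+", seg.text.lower())):
            index[word].append(seg.start)
    return index

def find(index: dict[str, list[float]], word: str, fps: int = 24) -> list[str]:
    """Return the timecodes where a word is spoken, in order."""
    return [to_timecode(t, fps) for t in sorted(index.get(word.lower(), []))]

# Made-up transcript segments for illustration.
transcript = [
    Segment(3.5, "We shot the interview at sunrise."),
    Segment(62.0, "The sunrise light was perfect."),
    Segment(125.25, "Let's cut to the drone footage."),
]
index = build_index(transcript)
print(find(index, "sunrise"))  # ['00:00:03:12', '00:01:02:00']
```

A real tool would index phrases and fuzzy matches too, but the core payoff is the same: words map back to timecodes you can jump to in the edit.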

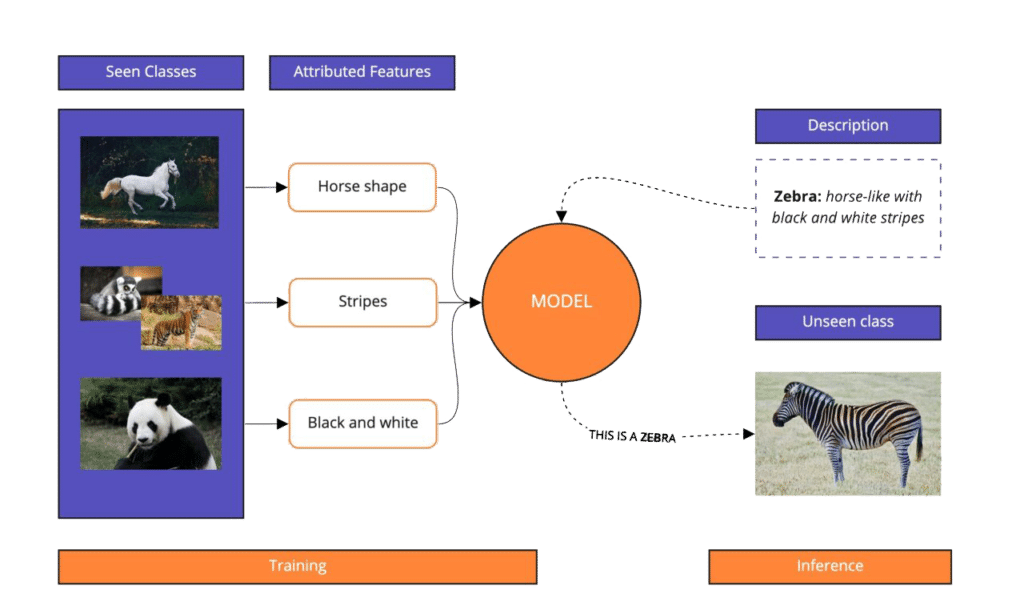

But it also leverages zero-shot learning for your media. “What’s zero-shot learning?” you ask.

Imagine an A.I. that can understand and execute tasks it was never explicitly trained for. StoryToolkitAI with zero-shot learning is like a charades partner who somehow gets it right on the first try, while I’m still standing there making random gestures, hoping someone will figure out that I’m acting out Jurassic Park and not just practicing my T-Rex impersonation for Halloween.

How Zero-Shot Learning Works. Via Modular.ai.

Powered by the goodness of GPT, StoryToolkitAI isn’t just a tool. It’s a conversational partner. You can use it to ask detailed questions about your indexed content, just like you would talk with ChatGPT. And for you DaVinci Resolve users out there, StoryToolkitAI integrates with Resolve Studio. Just remember that Resolve Studio is the paid version, not the free one.

Diving Even Nerdier: StoryToolkitAI uses various open-source technologies, including OpenAI’s RN50x4 CLIP model for zero-shot recognition. One of my favorite aspects of StoryToolkitAI is that it runs locally. You get privacy, and with development ongoing, there’s plenty more to come.
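At its core, CLIP-style zero-shot recognition scores an image embedding against text embeddings of candidate labels written as prompts (say, “a photo of a waterfall”) and picks the closest match, so adding a new category means writing a new prompt rather than retraining. The sketch below shows only that scoring step, under stated assumptions: the 3-D vectors are made up, whereas a real pipeline would get much larger embeddings from the RN50x4 image and text encoders, which this code does not load. The fixed scale of 100 approximates the learned temperature in OpenAI’s released CLIP models.

```python
import math

def normalize(v):
    """Scale a vector to unit length so dot products become cosine similarities."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]

def zero_shot_classify(image_emb, prompt_embs, scale=100.0):
    """Rank candidate prompts for one image, best first.

    Each prompt's logit is scale * cosine(image, prompt); a softmax over the
    candidates turns logits into probabilities. No label-specific training needed.
    """
    img = normalize(image_emb)
    logits = {
        label: scale * sum(a * b for a, b in zip(img, normalize(emb)))
        for label, emb in prompt_embs.items()
    }
    top = max(logits.values())  # subtract the max for numerical stability
    exps = {label: math.exp(logit - top) for label, logit in logits.items()}
    total = sum(exps.values())
    ranked = [(label, e / total) for label, e in exps.items()]
    return sorted(ranked, key=lambda pair: pair[1], reverse=True)

# Made-up 3-D "embeddings" purely for illustration.
image = [0.9, 0.1, 0.2]
prompts = {
    "a photo of a waterfall": [0.8, 0.2, 0.1],
    "a photo of a kitchen faucet": [0.1, 0.9, 0.3],
    "a photo of a city street": [0.2, 0.1, 0.95],
}
best_label, prob = zero_shot_classify(image, prompts)[0]
print(best_label)  # a photo of a waterfall
```

Swapping in a new label is just another entry in `prompts`, which is what makes the approach feel like it “gets it right the first time.”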

Now, imagine wanting newer or even bespoke analytical A.I. models tailored to specific clients or projects: the power to choose and customize your A.I. models. Well, who doesn’t like playing God with a bunch of zeros and ones, right? Lastly, StoryToolkitAI is passionately open-source. Octavian is committed to keeping this project accessible and free for everyone. To that end, you can visit the project’s Patreon page to support ongoing development (I do!).

On a larger scale, and on a personal note, I believe the architecture here is a blueprint for how things should be done in the future. That is, media should be processed by an A.I. model of your choosing, one with transparent practices, and that processing should happen independently of your creative software.

A potential architecture for AI implementation for Creatives

Or better yet, tie this into a video editing application’s plug-in structure, and you have a complete media analysis tool that runs locally and uses the model you choose.
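Here’s one way to picture that architecture in code, as a minimal sketch: the host (for example, an editing application’s plug-in layer) only knows a small interface, and which model sits behind it is up to you. Every name here (`Tag`, `Analyzer`, `AnalysisHost`, `StubLogoDetector`) is hypothetical, and the stub stands in for a real local model.

```python
from dataclasses import dataclass
from typing import Protocol

@dataclass(frozen=True)
class Tag:
    kind: str    # e.g. "speech", "face", "logo"
    value: str
    start: float  # seconds
    end: float

class Analyzer(Protocol):
    """Anything with a name and an analyze() method can plug in."""
    name: str
    def analyze(self, media_path: str) -> list[Tag]: ...

class AnalysisHost:
    """Runs whichever analyzers the user registers; knows nothing about the models."""
    def __init__(self) -> None:
        self._analyzers: dict[str, Analyzer] = {}

    def register(self, analyzer: Analyzer) -> None:
        self._analyzers[analyzer.name] = analyzer

    def run(self, media_path: str, use: list[str]) -> list[Tag]:
        tags: list[Tag] = []
        for name in use:
            if name not in self._analyzers:
                raise KeyError(f"no analyzer registered as {name!r}")
            tags.extend(self._analyzers[name].analyze(media_path))
        return sorted(tags, key=lambda t: t.start)  # timeline order

class StubLogoDetector:
    """Placeholder for a real local model; returns a fixed result."""
    name = "stub-logo"
    def analyze(self, media_path: str) -> list[Tag]:
        return [Tag("logo", "ACME", 12.0, 15.5)]

host = AnalysisHost()
host.register(StubLogoDetector())
print(host.run("interview_A001.mov", use=["stub-logo"]))
```

Swapping models then means registering a different analyzer; the host, and the creative software around it, doesn’t change.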

2. Twelve Labs

Have you ever heard of Twelve Labs?

Don’t confuse Twelve Labs with ElevenLabs, the A.I. voice synthesis company.

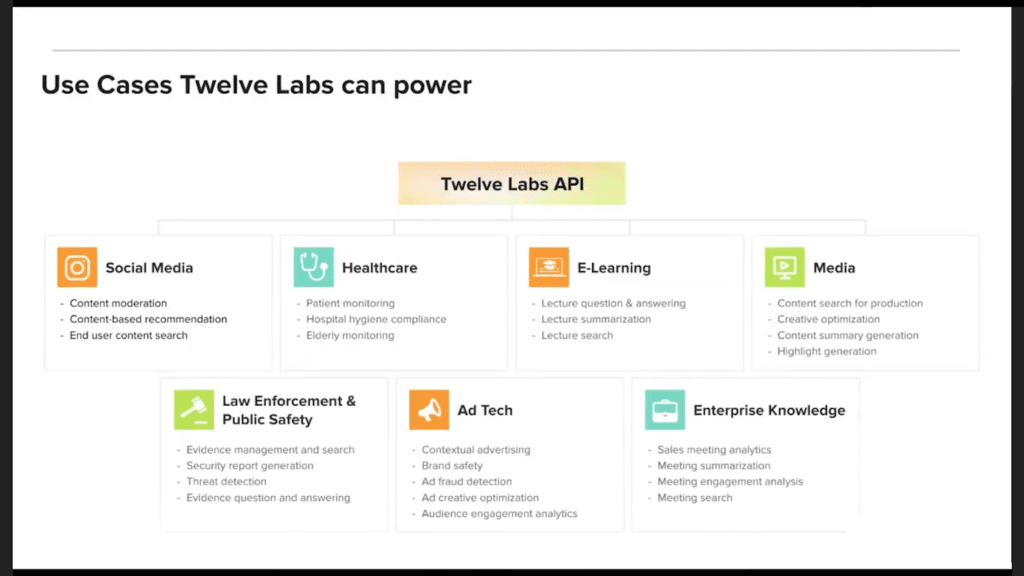

Twelve Labs is another interesting solution that I think is poised to blow up… or at least be acquired. While many analytical A.I. indexing solutions search for content based on literal keywords, what if you could perform a semantic search? That is, a search engine that interprets your words based on your intent and the context of your search.

This type of search is intended to improve the quality of search results. Let’s say that here in the U.S., we wanted to search for the term “knob”.

Other English speakers may be searching for something completely different.

That may not actually be the best way to illustrate this. Let’s try something different.

“And in order to do this, you would need to be able to understand video the way a human understands video. What we mean by that is not only considering the visual aspect of video and the audio component but the relationship between those two and how it evolves over time, because context matters the most.”

Travis Couture, Founding Solutions Architect, Twelve Labs
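To make the keyword-versus-semantic difference concrete, here’s a toy sketch. It is not Twelve Labs’ API or method: real semantic video search relies on a trained model that embeds visuals, audio, and text, while here the clip descriptions, their 3-D “embeddings,” and the query embedding are all made up for illustration.

```python
import math

def cosine(a, b):
    """Cosine similarity between two vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

# Hypothetical clips: (description, embedding). The dimensions loosely stand for
# [outdoors/nature, indoors/household, motion/energy].
clips = {
    "clip_01": ("river rapids at dawn", [0.9, 0.05, 0.6]),
    "clip_02": ("kitchen faucet running", [0.05, 0.95, 0.3]),
    "clip_03": ("hikers by a waterfall", [0.85, 0.1, 0.4]),
}

def keyword_search(query):
    """Literal matching: a clip matches if any query word appears in its description."""
    words = query.lower().split()
    return [cid for cid, (desc, _) in clips.items() if any(w in desc.split() for w in words)]

def semantic_search(query_embedding, top_k=2):
    """Rank clips by how close their embeddings are to the query's meaning."""
    ranked = sorted(clips, key=lambda cid: cosine(query_embedding, clips[cid][1]), reverse=True)
    return ranked[:top_k]

query = "natural running water"
query_embedding = [0.9, 0.05, 0.5]  # assumed output of the same hypothetical model

print(keyword_search(query))             # ['clip_02'], a literal match on "running"
print(semantic_search(query_embedding))  # the two nature clips rank first
```

The keyword search latches onto the faucet because it shares the word “running,” and it misses the waterfall because “waterfall” isn’t the literal word “water.” The embedding-based ranking puts the two nature clips on top instead.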

Right now, Twelve Labs offers a free plan, hosted by Twelve Labs, and it’s fairly generous. However, if you want to take deeper advantage of their platform, they also have a developer plan. Twelve Labs tech can be used for tasks like ad insertion or even content moderation, such as figuring out which videos featuring running water depict natural scenes, like rivers and waterfalls, versus manmade objects, like faucets and showers.

Twelve Labs use cases

With no insider info, I’d wager that Twelve Labs will be acquired as the tech is too good not to be rolled into a more complete platform.

3. Curio Anywhere

During the production of this episode, our friends at Wasabi acquired the Curio product.

Next up, we have Curio Anywhere by GrayMeta, one of the first complete analytical A.I. solutions for media facilities. Now, this isn’t just a tagging tool. It’s a pioneering approach to using A.I. for indexing and tagging your content, using GrayMeta’s own local models and, if you so choose, cloud models too.

In addition to all of this, there’s a twist. Traditionally, metadata generated by analytical A.I. can drown you in data, options, and choices, overloading and overwhelming you. GrayMeta’s answer is a user-friendly interface that simplifies the search and audition process right in your web browser. This means being able to see at a glance what types of search results are present in your library across all of the models.

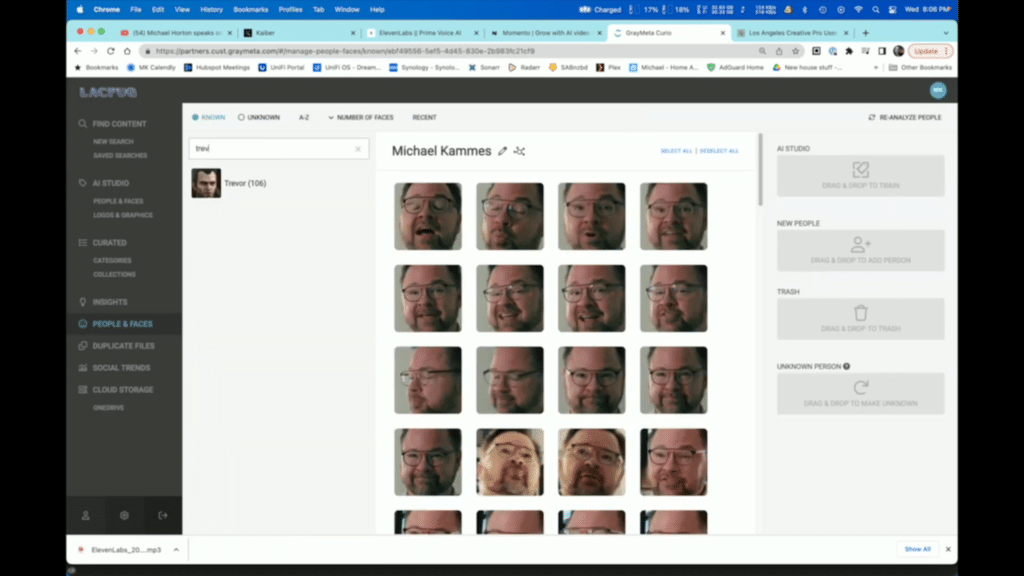

Is it a spoken word? Maybe it’s the face of someone you’re looking for. Perhaps it’s a logo. And for you Adobe Premiere users out there, you can access all of these features right within Premiere Pro via GrayMeta’s Curio Anywhere Panel Extension. Now let’s talk customization. Curio Anywhere allows you to refine models to recognize specific faces or objects.

Curio Anywhere Face Training Interface

Imagine the possibilities for your projects without spending days training a model to find that person. Connectivity is key, and Curio Anywhere nails it: whether it’s cloud storage or local data, Curio Anywhere has you covered. And it’s not just about media files, either. Documents are also part of the package. Here’s a major win: Curio Anywhere’s models are developed in-house by GrayMeta, which means no excessive reliance on costly third-party analytical services.

But if you do prefer those services, don’t worry: Curio Anywhere supports them too, via API. For those of you eager to delve deeper into GrayMeta and their vision, I had a fascinating conversation with Aaron Edell, President and CEO of GrayMeta. We talk about a lot of things, including Curio Anywhere, making coffee, and whether the robots will take over.

4. CodeProject.AI Server

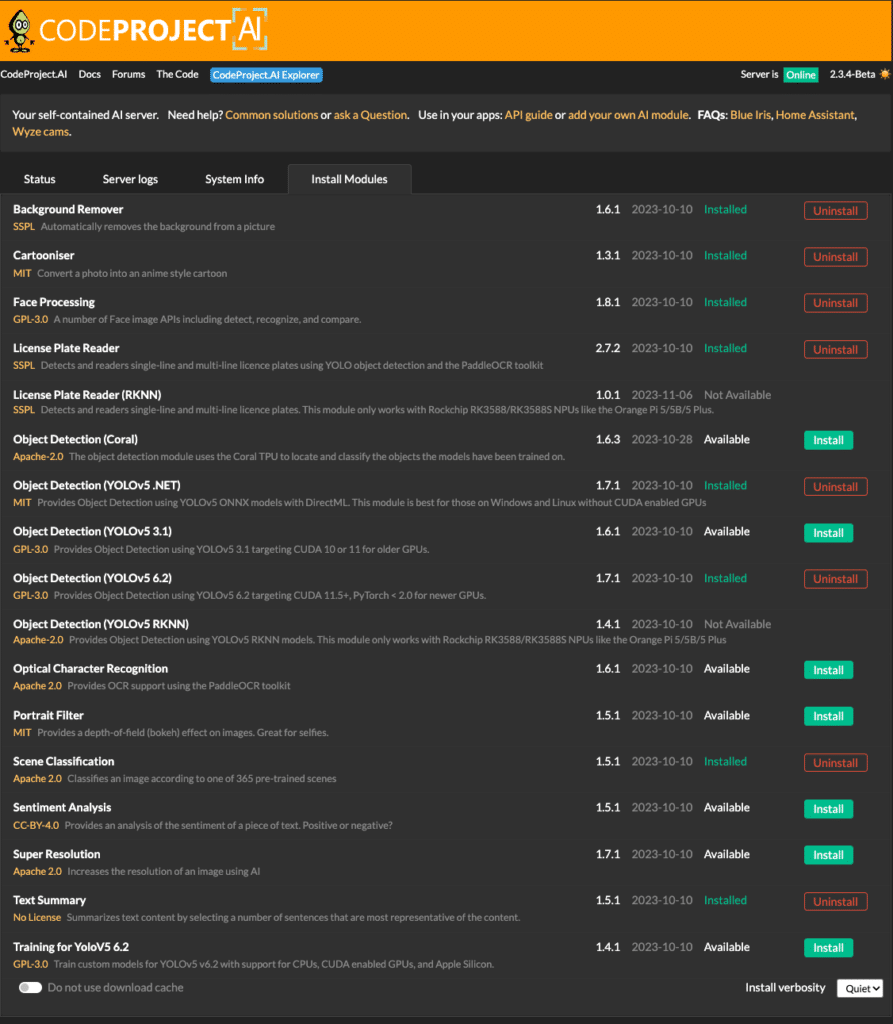

It’s time to get a little more low-level with CodeProject.AI Server, which handles both analytical and generative A.I. Think of CodeProject.AI Server as Batman’s utility belt.

Each gadget and tool on the belt represents a different analytical or generative A.I. function designed for specific tasks. And just like Batman has a tool for just about any challenge, CodeProject.AI Server offers a variety of A.I. tools that can be selectively deployed and integrated into your systems, all without the hassle of cloud dependencies. It includes object and face detection, scene recognition, text and license plate reading, and for funsies, even the transformation of faces into anime-style cartoons.

CodeProject.AI Server Modules

Additionally, it can generate text summaries and perform automatic background removal from images. Now you’re probably wondering, “How does this integrate into my facility or my workflow?” The server offers a straightforward HTTP REST API. For instance, adding scene detection to your app can be as simple as making a JavaScript call to the server’s API. That makes it a bit more universal than a proprietary standalone A.I. framework.

It’s also self-hosted and open source, runs across platforms, and can be called from any language that can make HTTP requests. It also allows for extensive customization and the addition of new modules to suit your specific needs. This flexibility makes it adaptable to a wide range of applications, from personal projects to enterprise solutions. The server is designed with developers in mind.
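The REST integration described above can be sketched in a few lines of Python instead of JavaScript; the HTTP call is the same either way. This assumes a local server on CodeProject.AI Server’s default port 32168 and a DeepStack-style `/v1/vision/scene` route that takes an `image` form field and returns JSON with `success`, `label`, and `confidence`. Check the API reference for your installed version and modules before relying on those details. Request building and response parsing are separate functions so they can be checked without a running server.

```python
import json
import os
import urllib.request
import uuid

# Assumptions: local server on the default port and a DeepStack-style scene route.
BASE_URL = "http://localhost:32168"
SCENE_ROUTE = "/v1/vision/scene"

def build_scene_request(image_bytes: bytes, filename: str = "frame.jpg",
                        base_url: str = BASE_URL) -> urllib.request.Request:
    """Build (but don't send) a multipart/form-data POST with the image in an 'image' field."""
    boundary = uuid.uuid4().hex
    head = (
        f"--{boundary}\r\n"
        f'Content-Disposition: form-data; name="image"; filename="{filename}"\r\n'
        "Content-Type: application/octet-stream\r\n\r\n"
    ).encode()
    tail = f"\r\n--{boundary}--\r\n".encode()
    return urllib.request.Request(
        base_url + SCENE_ROUTE,
        data=head + image_bytes + tail,
        headers={"Content-Type": f"multipart/form-data; boundary={boundary}"},
        method="POST",
    )

def parse_scene_response(raw: bytes) -> tuple[str, float]:
    """Pull (label, confidence) out of the assumed JSON response shape."""
    payload = json.loads(raw)
    if not payload.get("success"):
        raise RuntimeError(payload.get("error", "scene detection failed"))
    return payload["label"], float(payload["confidence"])

def detect_scene(image_path: str) -> tuple[str, float]:
    """Send one frame to the server and return its scene label and confidence."""
    with open(image_path, "rb") as f:
        request = build_scene_request(f.read(), filename=os.path.basename(image_path))
    with urllib.request.urlopen(request, timeout=30) as response:
        return parse_scene_response(response.read())
```

With the server running, something like `label, confidence = detect_scene("frame_0001.jpg")` would return the top scene label for that frame (the file name is a placeholder). Keeping the assumed route and response shape in two small functions makes them easy to adjust if your version differs.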

5. Pinokio

Pinokio is a playground for you to experiment with the latest and greatest in Generative A.I. It brings together many disparate repos on GitHub. But let’s back up a moment. You’ve heard of GitHub, right? Most folks in the post-production space don’t spend much time on GitHub.

It’s what developers use to collaborate and share code for almost any project you can think of. GitHub is where cool indie stuff like A.I. starts before it goes mainstream. Yes, you too can be a code hipster: with Pinokio and GitHub, you can experiment with various A.I. services before they go mainstream and become soooo yesterday. But if GitHub is for programmers, how can non-coding types use it?

Pinokio is a self-contained browser that allows you to install and run various analytical and generative A.I. applications and models without knowing how to code. Pinokio does this by taking GitHub code repositories (called repos) and automating the complex setup of terminals, git clones, and environment settings. With Pinokio, it’s all about easy one-click installation and deployment, all within its own browser.
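For a sense of what Pinokio saves you from, here are roughly the manual steps a typical Python-based A.I. repo asks for: clone it, create a virtual environment, and install its requirements. This is a generic sketch, not Pinokio’s own install-script format. The repo URL is a placeholder, and it assumes the repo ships a `requirements.txt` and that you’re on macOS or Linux (Windows virtual environments put pip under `Scripts`). With `dry_run=True` it only prints the commands.

```python
import subprocess
import sys
from pathlib import Path

def setup_commands(repo_url: str, workdir: Path) -> list[list[str]]:
    """Return the clone, virtual-environment, and install steps as argument lists."""
    name = repo_url.rstrip("/").rsplit("/", 1)[-1].removesuffix(".git")
    repo_dir = workdir / name
    venv_dir = repo_dir / "venv"
    pip = venv_dir / "bin" / "pip"  # assumption: macOS/Linux layout (Windows: venv\Scripts\pip.exe)
    return [
        ["git", "clone", repo_url, str(repo_dir)],
        [sys.executable, "-m", "venv", str(venv_dir)],
        [str(pip), "install", "-r", str(repo_dir / "requirements.txt")],  # assumes the repo ships one
    ]

def run_setup(repo_url: str, workdir: Path, dry_run: bool = True) -> None:
    """Print each step; actually run them only when dry_run is False."""
    for cmd in setup_commands(repo_url, workdir):
        print(" ".join(cmd))
        if not dry_run:
            subprocess.run(cmd, check=True)  # stop at the first failing step

# Placeholder URL; a dry run only prints the commands.
run_setup("https://github.com/example/some-ai-tool.git", Path("ai-tools"))
```

Multiply that by GPU drivers, model downloads, and per-repo quirks, and the appeal of one-click installs becomes obvious.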

Diving into Pinokio’s capabilities, there’s already a list chock full of diverse A.I. applications, from image manipulation with Stable Diffusion and FaceFusion to voice cloning and A.I.-generated videos with tools like K and AnimateDiff. The platform covers a broad spectrum of A.I. tools. Pinokio helps democratize access to A.I. by combining ease of use with a list of modules that keeps growing across various sectors.

Platforms like Pinokio are vital in empowering users to explore and leverage A.I.’s full potential. The cool part is that these models are constantly being developed and refined by the community. Plus, since it runs locally and it’s free, you can learn and experiment without being charged per revision.

Every week there are more analytical and generative A.I. tools being developed and pushed to market.

If I missed any, let me know what they are and what they do in the comments section below, or hit me up online. Subscribing and sharing would be greatly appreciated.

5 THINGS is also available as a video or audio-only podcast, so search for it on your podcast platform du jour. Look for the red logo!


Until the next episode: learn more, do more.

Like early, share often, and don’t forget to subscribe.

PSA: This is not a paid promotion.

First video of the new year. Love the focus on useful AI tools for video and not the flavor of the month thing. Happy 2024 Michael!

Thanks for the comments and thanks for watching, Jason. I appreciate it!